For companies planning to adopt artificial intelligence, selecting the right AI software development partner can define the success or failure of the entire initiative. The decision affects everything from early model accuracy to long-term product stability and team adoption.

Many AI software vendors offer AI development services. The challenge is identifying which ones can align with your specific goals, data structure, and delivery expectations. Businesses that evaluate vendors poorly during selection often face delays, rework, or budget overruns.

Thinking about bringing in an AI software development company? This guide helps you get clear on what to prepare on your side, what questions to ask, and how to tell whether an AI service provider is the right long-term fit for your business.

Why AI projects need a different evaluation

Traditional software development and AI product development require different evaluation methods. The delivery process, team setup, and long-term involvement vary. Applying the same criteria used for software vendors often results in mismatched expectations and poor outcomes.

Traditional software vendors vs AI software vendors

Most standard software vendors follow fixed-scope delivery. Their focus is on code, UI, and feature development based on defined requirements. This works for websites, CRMs, and backend systems. AI software development companies, however, handle learning systems as well as programmed logic.

A reliable provider of AI development services works with uncertainty, iterates using data, and includes domain specialists in the process. Their teams are built differently, combining data scientists, ML engineers, infrastructure experts, and business analysts. This structure is essential to train models that improve over time.

Why delivery models, team structures, and post-launch work differ

In AI projects, development continues even after deployment. Models need to be monitored, tested against new data, and retrained periodically. AI software development agencies build these layers in from day one. Traditional vendors may not provide this continuity.

The entire delivery model changes:

- Discovery involves data review, not just feature discussion

- Prototypes are tested with variables, not hardcoded logic

- Post-launch, success depends on performance feedback, not just uptime

This is why choosing among AI software development companies requires a deeper evaluation than a standard checklist.

Where traditional firms fall short in AI use cases

Many software firms accept AI projects without the structure or expertise. They treat models like features and fail to prepare for how AI behaves in production environments.

Common gaps include:

- No workflow for data preprocessing or labelling

- No systems for tracking model experiments or measuring drift

- Weak understanding of data privacy and ethics in AI software development

- No alignment with business goals or user context

These issues typically surface after delivery, when it becomes clear the model is not usable at scale.

The cost of choosing wrong in AI

Working with the wrong AI software development company leads to more than technical setbacks. It affects timelines, internal trust, and return on investment.

The typical impact includes:

- Models that perform well in testing but fail in live use

- Repeated cycles of rework with no measurable progress

- Teams losing time on debugging and clarification

- Loss of executive buy-in due to visible delays and missed targets

This makes vendor selection a business decision, not just a technical one.

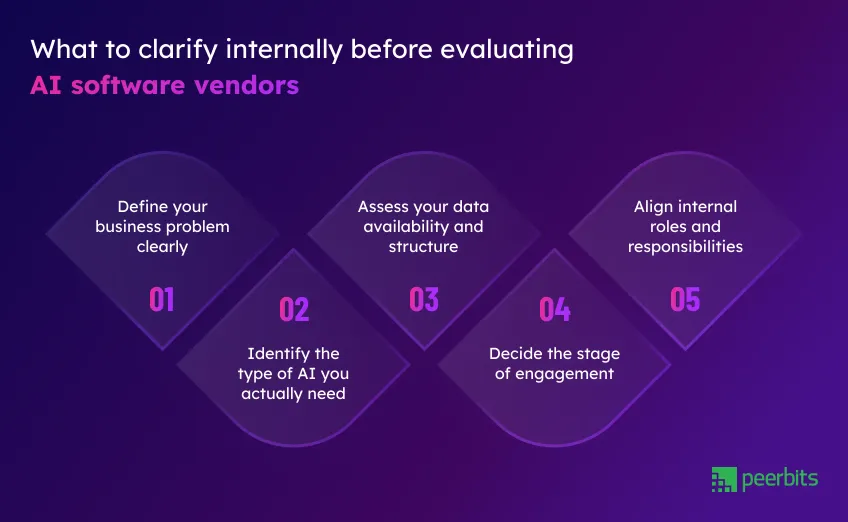

Preparation before choosing an AI software development company

Before reaching out to any AI software development company, it is important to prepare internally. The clarity you build at this stage directly impacts the success of vendor discussions and the quality of the solution delivered.

Define your business problem clearly

AI should always be mapped to a measurable outcome. Whether it is reducing manual processes, improving customer response time, or increasing forecast accuracy, the objective must be clear from the start.

Exploring AI development services without a defined use case often leads to scope confusion, misaligned expectations, and poor product-market fit. A structured problem statement helps vendors assess feasibility, estimate effort, and recommend the right solution path.

Identify the type of AI you actually need

Not every vendor specializes in every AI category. Some excel in predictive models, others in natural language processing or computer vision. Define whether your solution requires:

- Recommendation systems

- Text or voice-based interfaces (NLP)

- Pattern recognition in data streams

- Computer vision for image or video analysis

Misalignment here often leads to choosing a vendor that can deliver, but not efficiently.

Assess your data availability and structure

Any AI consulting services provider will require access to your data, even at the early stages of discovery. Before vendor meetings, evaluate:

- The volume and type of data you hold

- The ratio of structured to unstructured data

- Data labelling readiness

- Sensitivity and compliance requirements

Understanding your current level of data maturity gives the vendor a clear starting point and avoids unrealistic assumptions during scoping.

Decide the stage of engagement

Not all vendors support every phase of the product lifecycle. Some focus on early-stage prototyping, while others prefer production-scale delivery.

Define whether you need:

- A proof of concept (POC)

- A minimum viable product (MVP)

- A full-scale build

- A long-term partner for continuous improvement

Clear alignment on project stage helps you filter agencies and set the right budget and timeline expectations.

Align internal roles and responsibilities

Vendor success also depends on internal coordination. Define who from your team will be involved in:

- Requirement gathering and feedback loops

- Data access and clarification

- Review of technical milestones and model behavior

Assigning these roles early prevents delays, avoids duplication, and creates a smoother collaboration experience.

Ethical AI practices to look for in a partner

Every AI solution interacts with data that may be sensitive, regulated, or business-critical. A dependable AI software development company must follow responsible practices to ensure the model is fair, explainable, and compliant with applicable laws.

1. Bias control mechanisms

Bias in AI comes from imbalanced or incomplete training data. Vendors should have systems in place to audit data sources and evaluate model predictions across different user groups or scenarios.

Ask how they detect, measure, and respond to skewed outcomes. Their ability to flag biases early and adjust training cycles reflects their maturity in handling fairness when solutions impact hiring, lending, or healthcare decisions.
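
To make "evaluate model predictions across different user groups" concrete, the sketch below compares the positive-prediction rate and accuracy for each group and flags the result when the gap between groups is large. The function name `audit_by_group`, the 0.1 gap threshold, and the toy data are illustrative assumptions, not a standard; vendors often rely on established fairness metrics and tooling, and the right threshold depends on the use case.

```python
from collections import defaultdict


def audit_by_group(y_true, y_pred, groups, max_gap=0.1):
    """Compare positive-prediction rate and accuracy across groups.

    Returns per-group stats, the gap between the highest and lowest
    positive rate, and a flag when that gap exceeds max_gap.
    max_gap=0.1 is a hypothetical default, not an industry rule.
    """
    counts = defaultdict(lambda: {"n": 0, "pos": 0, "correct": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pos"] += pred                 # predictions are 0/1 labels
        c["correct"] += int(truth == pred)
    stats = {
        g: {"positive_rate": c["pos"] / c["n"], "accuracy": c["correct"] / c["n"]}
        for g, c in counts.items()
    }
    rates = [s["positive_rate"] for s in stats.values()]
    gap = max(rates) - min(rates)
    return stats, gap, gap > max_gap


if __name__ == "__main__":
    # Toy data: two hypothetical user groups, A and B
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    stats, gap, flagged = audit_by_group(y_true, y_pred, groups)
    print(stats, gap, flagged)
```

A flagged result is a prompt for investigation (for example, checking whether one group is underrepresented in the training data), not proof of unfairness on its own.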

2. Data retention and compliance protocols

Clear rules around how customer data is handled are critical. Vendors must be transparent about:

- Whether they store your data

- Where the data is hosted

- How long it is retained

- What encryption and access controls are used

This is both a technical and a legal requirement. Data privacy in AI development is a shared responsibility, and your vendor must comply with the local and global regulations that apply to your data.

3. Explainability and auditability of models

Some industries, like finance, healthcare, and insurance, require that every automated decision can be explained and audited. Models used in such environments must provide clear insights into how predictions were made.

Ask if the vendor offers model explainability tools. Can they show confidence scores, contributing variables, or decision thresholds? This level of transparency supports both internal stakeholders and external audits.
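
For a linear or logistic model, the contributing variables behind a prediction can be read off directly: each feature adds weight * value to the log-odds. The sketch below returns a confidence score, the decision at a threshold, and the features ranked by influence. The feature names, weights, and the 0.5 threshold are hypothetical; more complex models usually need dedicated explanation techniques such as SHAP or LIME rather than this direct decomposition.

```python
import math


def explain_linear(weights, bias, features, threshold=0.5):
    """Explain a single prediction from a logistic model.

    Each feature contributes weight * value to the log-odds, so the
    contributions plus the bias add up exactly to the raw score.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    confidence = 1 / (1 + math.exp(-score))  # sigmoid: probability of the positive class
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {
        "confidence": confidence,
        "decision": confidence >= threshold,  # the decision threshold an auditor can inspect
        "top_factors": ranked,                # largest absolute contribution first
    }


if __name__ == "__main__":
    # Hypothetical credit-style model with features already scaled
    weights = {"income": 0.8, "debt_ratio": -1.5, "late_payments": -0.6}
    applicant = {"income": 1.0, "debt_ratio": 0.4, "late_payments": 2.0}
    result = explain_linear(weights, bias=0.2, features=applicant)
    print(f"confidence={result['confidence']:.2f}, approved={result['decision']}")
    for name, contribution in result["top_factors"]:
        print(f"  {name}: {contribution:+.2f}")
```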

A provider that prioritizes data privacy and ethics in AI development will not treat these as optional. They will embed them into the design, testing, and deployment processes from the start.

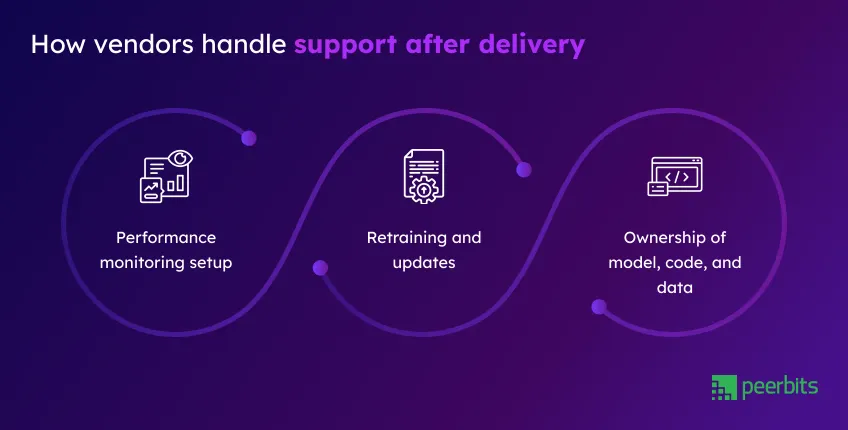

Post-deployment responsibility and support

Working with an AI software development company does not end at deployment. In fact, performance insights begin once the solution goes live. A good vendor prepares for this phase well in advance and stays involved to keep the system stable and relevant.

1. Performance monitoring setup

Ask whether the vendor actively monitors how the model performs in production. This includes:

- Tracking changes in accuracy over time

- Detecting model drift

- Logging infrastructure or runtime issues

Without monitoring, errors can go unnoticed, and decision quality may drop without any warning. A responsible team will have monitoring dashboards, performance thresholds, and alerts in place from day one.
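
One common way to detect drift is to compare the distribution of a feature (or of model scores) in live traffic against a training-time reference. The sketch below computes the Population Stability Index (PSI) over equal-width bins and raises an alert above 0.2, a widely quoted rule of thumb rather than a universal standard. The function names, bin count, and threshold are assumptions for illustration; production setups typically run such checks per feature on a schedule and feed the results into dashboards and alerts.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample.

    Bins are equal-width over the reference range; live values outside that
    range are clipped into the edge bins. eps floors empty-bin shares so the
    logarithm stays defined.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected, actual, threshold=0.2):
    """Return (psi_value, alert), using the hypothetical 0.2 threshold."""
    value = psi(expected, actual)
    return value, value > threshold


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]        # spread across [0, 1)
    live = [0.9 + i / 1000 for i in range(100)]      # concentrated near the top
    print(drift_alert(reference, reference))
    print(drift_alert(reference, live))
```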

2. Retraining and updates

No AI model stays accurate forever. Data changes, user behavior shifts, and external conditions evolve. Your vendor should define:

- What triggers a retraining cycle

- Whether retraining is manual, scheduled, or based on usage data

- How they manage versioning and rollback if needed

Vendors offering long-term AI development services will include this in their support plan, not treat it as an extra request later.
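
A retraining policy can be written down as explicit, testable rules. The sketch below combines a scheduled trigger with two data-driven ones (an accuracy floor and a drift limit) and adds a minimal version history that supports rollback. `RetrainPolicy`, `ModelRegistry`, and all threshold values are hypothetical; real deployments usually rely on a proper model registry that stores artifacts and metadata, not just version labels.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class RetrainPolicy:
    max_days: int = 90          # scheduled trigger (hypothetical value)
    min_accuracy: float = 0.85  # performance floor (hypothetical value)
    max_psi: float = 0.2        # drift limit (hypothetical value)

    def reasons(self, last_trained, today, live_accuracy, psi_value):
        """Return the triggers that fired; an empty list means no retraining."""
        fired = []
        if today - last_trained > timedelta(days=self.max_days):
            fired.append("schedule")
        if live_accuracy < self.min_accuracy:
            fired.append("accuracy")
        if psi_value > self.max_psi:
            fired.append("drift")
        return fired


@dataclass
class ModelRegistry:
    """Keeps every deployed version label so a bad release can be rolled back."""
    versions: list = field(default_factory=list)

    def deploy(self, version):
        self.versions.append(version)
        return version

    @property
    def current(self):
        return self.versions[-1] if self.versions else None

    def rollback(self):
        if len(self.versions) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.versions.pop()
        return self.current


if __name__ == "__main__":
    policy = RetrainPolicy()
    print(policy.reasons(date(2025, 1, 1), date(2025, 2, 1), live_accuracy=0.80, psi_value=0.05))
    # prints ['accuracy']
```

Writing the triggers as code makes it easy to agree on them in the support plan and to audit later why a retraining cycle did or did not run.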

3. Ownership of model, code, and data

One of the most overlooked areas during vendor selection is ownership. Clarify:

- Who owns the trained model and the codebase

- Whether the vendor holds access rights to your data

- What happens if the engagement ends

The answers here should be clear and documented. This is where a trusted AI software development partner stands apart from vendors who prioritize short-term delivery.

The more clearly these areas are defined, the more confidence you will have in maintaining, scaling, or transitioning your AI system in the future.

Cost and pricing transparency

Evaluating the cost of AI software development is more than comparing numbers. The right vendor will break down their pricing model clearly, showing what is included at each stage and what additional costs may come later. A vague or overly simplified quote is often a red flag.

1. What is included in the quoted scope?

Before signing any proposal, confirm whether the price covers:

- Discovery and requirement workshops

- Data collection and cleanup

- Iterative model development and tuning

- Model validation and performance testing

- Post-deployment support and issue resolution

Some vendors present a base quote but add charges for iterations, feedback changes, or infrastructure. Others bundle these as part of a fixed engagement. Clear scope definition prevents hidden costs later.

2. Pricing structure across different phases

Ask how pricing is structured. Vendors typically follow one of these models:

- One-time fixed cost

- Milestone-based billing tied to deliverables

- Monthly retainer for ongoing work

Each model has its own advantages and trade-offs. Fixed pricing may work for smaller pilots, while long-term partnerships often shift to a retainer. Your choice should align with project stage and internal capacity.

Also check if pricing adjusts as the project moves from POC to full-scale rollout. Many companies underestimate how cost grows when moving from testing to real-world use.

3. Infra and cloud-related charges

Running AI systems involves significant infrastructure. Ask the vendor:

- Who manages and pays for hosting, storage, and training resources

- Whether cloud costs like GPU compute are estimated up front or charged monthly

- If they provide visibility into usage metrics or cloud billing dashboards

Vendors offering mature AI software development services will either integrate this into your existing environment or provide cost estimates based on realistic usage.

The more transparent the pricing structure, the easier it is to compare vendors, plan budgets, and justify the investment across internal teams.

Strategic and operational compatibility

An AI solution is not built in isolation. It involves frequent collaboration between your internal team and the vendor’s side. While technical skills matter, compatibility in communication, reporting, and issue handling is just as important.

Communication frequency and process

Consistent communication keeps expectations aligned and progress visible. Ask how often the team provides updates, what format they use, and who owns reporting.

- Do they follow a weekly update cycle?

- Are async check-ins documented?

- Is there a structured cadence for reporting to different stakeholder groups?

A good communication setup saves time, prevents misunderstandings, and avoids late-stage surprises.

Collaboration with non-technical stakeholders

AI projects impact departments beyond engineering. The vendor must be able to:

- Present insights to product owners

- Explain outcomes to leadership teams

- Walk strategy heads through performance reports or model behavior

This level of collaboration builds internal trust. It also increases adoption, since non-technical teams understand the value and logic behind the AI system.

Escalation and issue resolution policy

Delays, model underperformance, or system bugs are possible in any AI initiative. The difference lies in how the vendor responds.

Ask about their escalation path:

- Who owns resolution in case of model failure or performance drop?

- How fast is response time during production issues?

- Is there a shared tracker or support system?

These questions help evaluate whether you are working with a short-term vendor or a committed AI software development partner who plans for real-world conditions.

Smart questions to ask during evaluation

Asking the right questions during early conversations can quickly reveal how prepared a vendor is to handle real business challenges. A serious AI software development company will answer these with clarity and confidence.

Here are some questions worth asking:

1. What are your AI success metrics?

Vendors should define success beyond just "working models." Ask how they measure effectiveness, whether through accuracy, precision, user adoption, or cost savings. Mature teams can map these success metrics directly to your business goals.

2. Can you show your experiment tracking reports?

Experiment tracking shows how they manage model versions, test results, and performance comparisons. It also reflects their internal discipline and readiness for iterative work.
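
Experiment tracking does not need to be elaborate to be useful: each run should record its parameters, metrics, and the data version it used, so results can be compared and reproduced. The sketch below is a minimal in-memory log with a JSON export for reporting. `Run`, `ExperimentLog`, and the field names are illustrative assumptions; established teams typically use a dedicated tracking tool such as MLflow, so the reports a vendor shows you will likely be richer than this.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Run:
    run_id: str
    params: dict        # e.g. learning rate, model type
    metrics: dict       # e.g. {"f1": 0.78}
    data_version: str   # which dataset snapshot the run was trained on


@dataclass
class ExperimentLog:
    runs: list = field(default_factory=list)

    def log(self, run):
        self.runs.append(run)

    def best(self, metric, higher_is_better=True):
        """Return the run with the best value for `metric`, skipping runs without it."""
        scored = [r for r in self.runs if metric in r.metrics]
        if not scored:
            return None
        pick = max if higher_is_better else min
        return pick(scored, key=lambda r: r.metrics[metric])

    def to_json(self):
        """Serialize all runs so the comparison can be shared as a report."""
        return json.dumps([asdict(r) for r in self.runs], indent=2)


if __name__ == "__main__":
    log = ExperimentLog()
    log.log(Run("r1", {"lr": 0.01}, {"f1": 0.71}, "data-v1"))
    log.log(Run("r2", {"lr": 0.001}, {"f1": 0.78}, "data-v1"))
    print(log.best("f1").run_id)
    print(log.to_json())
```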

3. How do you handle changes in business logic mid-project?

Scope shifts are common, especially in AI. You need a vendor who can adapt quickly without resetting the entire pipeline. Ask how they manage mid-project adjustments, and how those changes impact timeline and cost.

4. What happens if the initial model does not hit target accuracy?

Early models may not always perform as expected. A confident team will have a fallback plan, whether that includes retraining, additional data cycles, or alternate modeling strategies. Avoid vendors who make unrealistic accuracy promises upfront.

5. Do you offer shared Slack or direct communication channels?

Smooth collaboration depends on how accessible the team is during the build phase. Check if they support shared Slack workspaces, direct messaging, or structured stand-ups. Clear lines of communication reduce delays and help surface blockers early.

Risks to know before hiring an AI development company

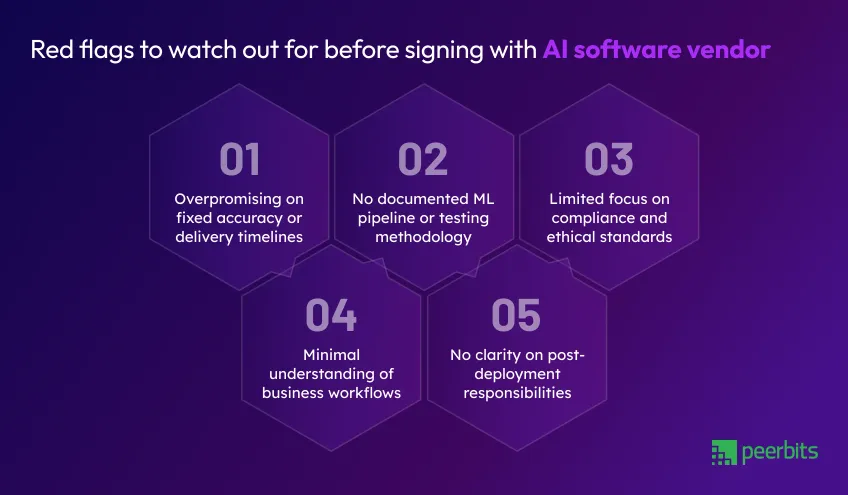

A well-structured vendor evaluation should include a close look at how potential partners manage expectations, compliance, delivery standards, and ongoing support. The points below highlight signals that may indicate risk or misalignment.

1. Overpromising on fixed accuracy or delivery timelines

Any reliable AI software development company understands that early accuracy and delivery speed depend on multiple variables, including data quality, scope clarity, and business constraints. Guaranteed benchmarks without context can be a sign of shallow evaluation or sales-driven promises.

2. No documented ML pipeline or testing methodology

Ask for insight into how the vendor handles model versioning, training cycles, and performance validation. If they cannot share structured workflows or past project references, it may indicate a lack of repeatable processes or operational maturity.

3. Limited focus on compliance and ethical standards

Responsible AI development includes discussions around data privacy, ethics, bias controls, and regulatory requirements. If the vendor avoids or downplays these topics, it raises concerns about long-term fit, especially in regulated industries.

4. Minimal understanding of business workflows

AI solutions must integrate with real operational flows. A vendor who focuses entirely on code and technical execution, without mapping use cases to internal systems or end-user behavior, may deliver a solution that is technically sound but difficult to adopt.

5. No clarity on post-deployment responsibilities

Long-term value comes from how AI systems evolve after deployment. Vendors should provide visibility into monitoring, retraining, and support models. A lack of post-deployment planning often points to short-term delivery focus rather than a full partnership mindset.

What successful AI partnerships look like

A strong AI engagement is about more than technical delivery; it creates alignment between business goals, technical direction, and continuous collaboration. Whether you are building a proof of concept or a long-term solution, the signs of a successful partnership are clear and measurable.

Project goals are translated into technical execution

In a healthy collaboration, your business objectives are fully understood, and the technical design reflects them. A capable AI software development partner will help shape the roadmap, break down abstract goals into measurable KPIs, and build models that tie back to those outcomes.

This clarity is a key criterion when selecting an AI software development company, especially when your project involves multiple teams and high-impact decisions.

Internal teams feel supported, not overwhelmed

A good partner does more than deliver code; it provides structure, clarity, and responsiveness. Your team should feel informed at every stage, with support during discovery, data preparation, testing, and deployment. The vendor’s role is to guide without overwhelming.

This kind of steady, reliable collaboration is often what separates a trusted AI partner from a team focused only on technical delivery.

Short feedback loops and well-documented decisions

Successful vendors maintain short review cycles and communicate clearly. Updates are regular, feedback is applied quickly, and every major decision, from model changes to infrastructure setup, is documented and easy to trace.

This level of discipline shows up in the success metrics that matter: less rework, smoother collaboration, and stronger trust across teams.

Model usage reflects real-world impact

The clearest sign of success is when your AI system is actually used. Not just tested, but adopted by internal users, integrated into workflows, and delivering value.

When models help reduce time, improve accuracy, or automate decisions effectively, you know you’ve chosen a partner who understands how to move from proof to production. And that’s a quality every business should prioritize when filtering AI software development agencies.

Final checklist before you decide

Before choosing your AI software development partner, run through this checklist to stay clear on what matters:

- They’ve delivered live AI systems with real-world results

- Discovery sessions are structured and involve experienced people

- Data privacy, compliance, and bias checks are built into their process

- Their team includes specialists, not just developers working with AI tools

- They plan for monitoring, retraining, and long-term system stability

- Ownership terms around code, models, and data are clearly explained

- Pricing is transparent across planning, development, and rollout stages

- Communication is regular, responsive, and documented at each step

Checking these boxes gives you a stronger chance of forming a partnership that works long after the first deployment.

Conclusion

Choosing the right AI software development company takes more than a quick review of past work. What really counts is how well they understand your business, how carefully they handle your data, and how prepared they are to support the solution once it goes live.

A dependable team brings structure, clarity, and a focus on outcomes. They help translate your goals into workable systems, communicate throughout the process, and stay involved to monitor performance, fine-tune models, and guide adoption.

If you’re in the process of shortlisting partners or planning a new project, use the checklist above to make a clear and confident decision. And when needed, connect with teams who’ve built AI products that work at scale and continue to add value over time.

FAQs

When should we involve an AI development company?

It’s best to involve a vendor during the early exploration phase. This helps them guide you on feasibility, data needs, and solution design before major time or budget is committed.

What should a good AI project proposal include?

A solid proposal should cover problem understanding, approach breakdown, data assumptions, delivery timelines, pricing structure, and post-launch support terms.

Can we start small and scale later?

Yes. Many companies start with a proof of concept or MVP to validate assumptions, then scale to a larger solution. Just make sure the initial build is designed with future scaling in mind.

Do we need an in-house technical team to work with an AI vendor?

Not necessarily. A good AI development company should be able to work with non-technical stakeholders, explain things clearly, and guide you through decisions.

Which industries benefit most from AI?

AI delivers real value in industries like healthcare, finance, logistics, retail, and manufacturing, especially where large volumes of data and repeatable decisions exist.

Do we need perfect data before starting?

You don’t need perfect data to get started. The right partner will help assess your data readiness, suggest improvements, and design around limitations where needed.

Can we work with an AI development company in another region or time zone?

Yes. Many AI companies are set up for global delivery. What matters more is structured communication, clear responsibilities, and aligned collaboration processes.