If you develop software, you know that the modern software industry demands speed, flexibility, and automation.

We have moved well past long release cycles and manual deployments. Today, software businesses ship changes quickly and reliably to stay competitive.

Many variables affect software development. Therefore, you need a system that keeps delivery consistent, secure, and scalable. Continuous Integration and Continuous Delivery (CI/CD) is that system.

CI/CD helps you manage that complexity, especially when running builds, keeping releases stable, and deploying across environments. Automating CI/CD on AWS makes the pipeline more predictable and repeatable, and it removes most opportunities for manual error.

According to the 2023 DORA State of DevOps Report, elite teams deploy code multiple times daily, with change failure rates under 15% and recovery times under an hour. Reaching that level of performance requires well-optimized, streamlined CI/CD pipelines.

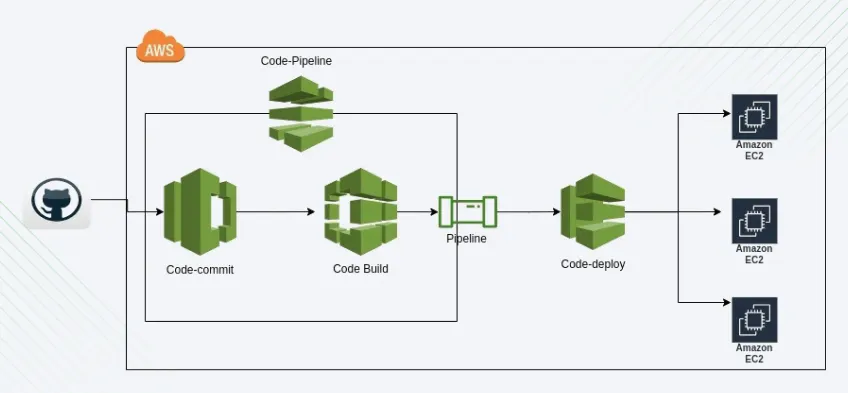

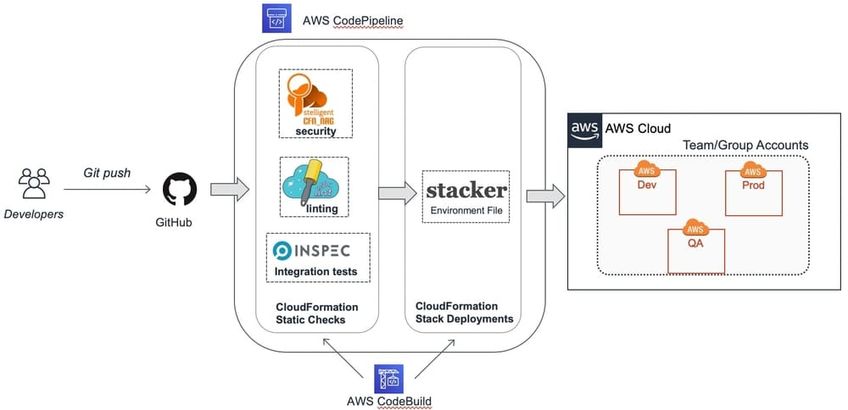

Together, AWS CodePipeline and AWS CodeBuild provide a framework for managing the whole release lifecycle on AWS. You can automate the build, test, and deploy stages while keeping control, scalability, and visibility.

Next, we look at how AWS CI/CD pipelines work and the steps to build one with AWS CodePipeline and CodeBuild.

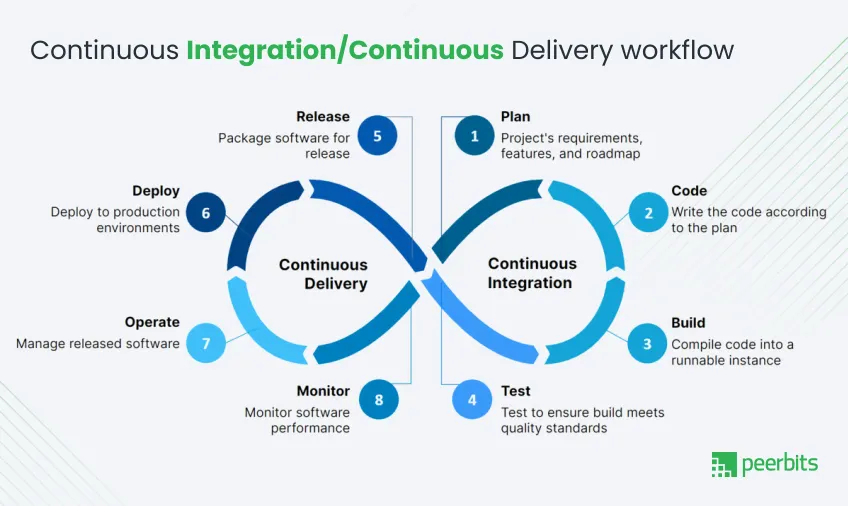

What are CI/CD Pipelines?

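CI/CD is an acronym for Continuous Integration and Continuous Delivery/Deployment. It is a set of practices that lets development teams deliver code changes more frequently and reliably. Continuous integration builds and tests every change as it is merged. Continuous delivery keeps the result ready to release at any time. Continuous deployment goes one step further and automatically releases every change that passes the pipeline.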

The aim of CI/CD is to automate the software release process, reduce manual errors, and improve engineering efficiency.

Tools like AWS CodePipeline and AWS CodeBuild can help you implement AWS CI/CD pipelines more efficiently. This allows your teams to release updates rapidly and consistently across environments.

Key goals of a CI/CD pipeline

With CI/CD pipelines, you can:

- Automate build, test, and deployment workflows to reduce manual intervention and accelerate delivery cycles.

- Increase deployment frequency and lower change failure rates by shipping features in small, manageable batches that each carry less risk.

- Recover faster from failures with automated rollbacks, and catch regressions early with automated tests, reducing downtime.

Common bottlenecks without streamlined CI/CD

Getting CI/CD right is not easy. Without a streamlined pipeline, you are likely to run into challenges such as these:

- Slow manual code reviews and handoffs can delay merges, slowing the flow of features into production.

- Inefficient build processes and slow feedback loops can make developers wait longer for test results and builds to complete.

- Without standardized deployment processes, environments drift apart, deployments fail, and rollbacks become frequent.

AWS CodePipeline and CodeBuild

Let’s look at the two AWS services at the core of a CI/CD pipeline on AWS.

AWS CodePipeline helps automate your software release process. You define your pipeline stages, and it takes care of coordinating everything from source to production.

CodePipeline integrates with GitHub, Bitbucket, and AWS CodeCommit, along with many other AWS and third-party tools, so you can keep releases tightly controlled.

Major traits of AWS CodePipeline:

- Fully managed, so no servers to manage or patch

- Works out-of-the-box with AWS services and most popular third-party tools

- Supports parallel actions and separate environments like dev, staging, and prod

AWS CodeBuild compiles your code, runs tests, and packages your build artifacts. It starts a fresh build environment whenever there is something to build, runs the commands you specify, and shuts down cleanly once it is done.

What stands out about AWS CodeBuild:

- No need to set up or maintain build servers

- Scales from small projects to large enterprise builds without extra setup

- Pricing is based on the compute type and the length of your builds, so you only pay for actual usage.

AWS CodePipeline and CodeBuild form the foundation of AWS CI/CD pipelines. They remove manual steps, standardize workflows, and help teams focus on writing code that ships.

CI/CD Pipelines vs. AWS CodePipeline vs. AWS CodeBuild

Three things often get lumped together: the concept of a CI/CD pipeline, and the two AWS services that bring it to life, AWS CodePipeline and AWS CodeBuild.

The CI/CD pipeline represents the flow from code commit to production. AWS CodePipeline is the orchestrator that controls the steps in that flow.

AWS CodeBuild, in turn, is the engine that compiles, tests, and packages the code.

Here’s how they stack up:

| Responsibility | CI/CD Pipeline (Concept) | AWS CodePipeline | AWS CodeBuild |
|---|---|---|---|
| Primary Function | Automate end-to-end delivery | Manage and coordinate pipeline stages | Build, test, and package application code |
| Focus Area | Process flow | Orchestration and automation | Compilation and testing |
| Triggers | Manual or automated | Event-based (e.g., code commits) or manual | Triggered by CodePipeline or standalone |
| Tooling | Tool-agnostic | Integrates AWS and external tools | Native build support, custom environments |
| Scaling | Depends on tooling | Scales per pipeline configuration | Auto-scales based on load |
| Pricing Model | Tool-dependent | Per active pipeline per month (V1) or per action-execution minute (V2), plus underlying services | Pay per build minute |

Bring CodePipeline and CodeBuild together inside a well-designed CI/CD pipeline, and you get a scalable setup that works across teams, AWS services, and release cycles.

Streamlining CI/CD Pipelines with AWS CodePipeline and CodeBuild

If you want a deployment pipeline that actually keeps up with how fast your team ships code, you need to architect it with intention.

That means more than setting up a few tools and hoping they work together. It means defining each step clearly, plugging in the right integrations, and replacing manual effort with reliable automation.

Let’s walk through what that looks like when using AWS CodePipeline and AWS CodeBuild as the backbone of your AWS CI/CD pipelines.

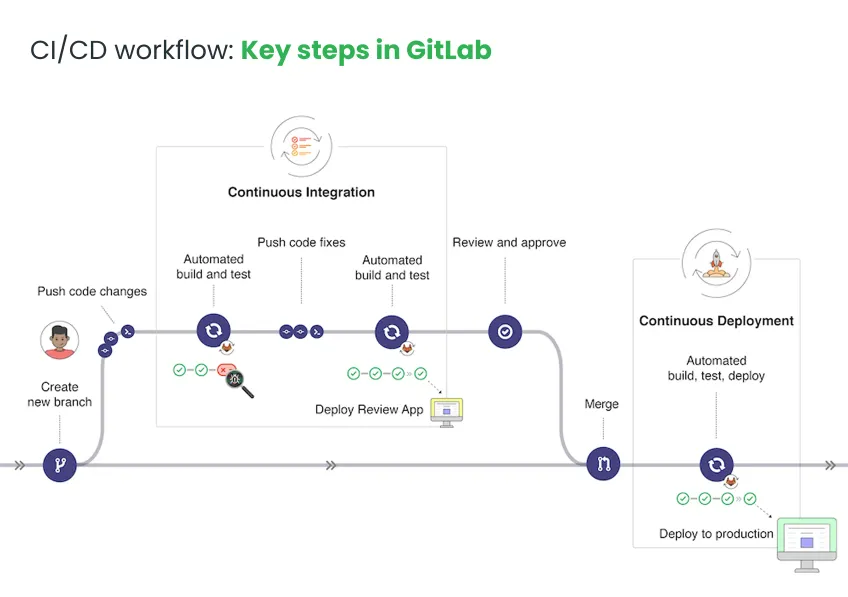

Designing the CI/CD workflow

Before you start connecting tools, you need to map out your workflow. What happens when a developer pushes code? How does it move from source to staging to production? Every decision here shapes your automation.

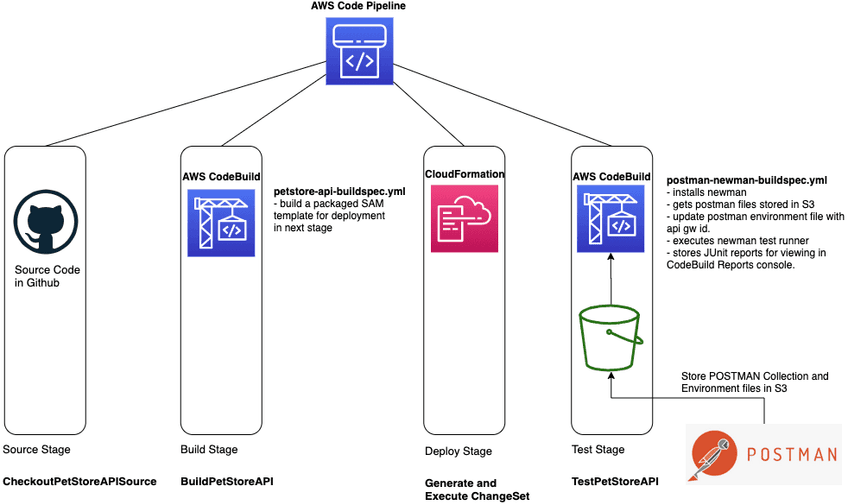

Define Pipeline Stages

Your CI/CD pipeline should be broken down into clear, logical stages that reflect how your team delivers software:

- Source: This is where the pipeline begins. Code sits in GitHub, CodeCommit, or Bitbucket. When there’s a commit or a pull request, CodePipeline picks it up.

- Build: CodeBuild compiles the source, runs tests, and produces artifacts. This stage can be split further into unit testing, integration testing, and packaging.

- Test: Go beyond a simple pass/fail check by adding security scans, code quality analysis, and contract tests for APIs.

- Deploy: Push to dev, QA, or production environments depending on rules and triggers.
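
As a rough sketch, the four stages above map onto the `stages` list that CodePipeline's pipeline structure uses, for example when passed to boto3's `create_pipeline(pipeline=...)`. The repository, connection ARN, CodeBuild project names, buckets, and role are hypothetical placeholders. Modeling the Test stage as a second CodeBuild project and deploying to S3 are illustrative choices, not requirements.

```python
# Sketch of a CodePipeline definition: Source -> Build -> Test -> Deploy.
# Every name and ARN passed in (and derived below) is a hypothetical placeholder.

def action(name, category, provider, config, inputs=(), outputs=()):
    """Build one action entry in the shape CodePipeline's API expects."""
    return {
        "name": name,
        "actionTypeId": {"category": category, "owner": "AWS",
                         "provider": provider, "version": "1"},
        "configuration": config,
        "inputArtifacts": [{"name": a} for a in inputs],
        "outputArtifacts": [{"name": a} for a in outputs],
        "runOrder": 1,
    }

def pipeline_definition(name, role_arn, bucket, connection_arn, repo, branch):
    stages = [
        {"name": "Source", "actions": [action(
            "Checkout", "Source", "CodeStarSourceConnection",
            {"ConnectionArn": connection_arn, "FullRepositoryId": repo,
             "BranchName": branch},
            outputs=["SourceOutput"])]},
        {"name": "Build", "actions": [action(
            "Compile", "Build", "CodeBuild", {"ProjectName": f"{name}-build"},
            inputs=["SourceOutput"], outputs=["BuildOutput"])]},
        {"name": "Test", "actions": [action(
            "QualityChecks", "Test", "CodeBuild", {"ProjectName": f"{name}-tests"},
            inputs=["SourceOutput"])]},
        {"name": "Deploy", "actions": [action(
            "DeployToDev", "Deploy", "S3",
            {"BucketName": f"{name}-dev-site", "Extract": "true"},
            inputs=["BuildOutput"])]},
    ]
    return {"name": name, "roleArn": role_arn,
            "artifactStore": {"type": "S3", "location": bucket},
            "stages": stages}
```

With credentials configured, the result could be passed as `boto3.client("codepipeline").create_pipeline(pipeline=pipeline_definition(...))`. Keeping the definition as plain data also makes it easy to review in pull requests.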


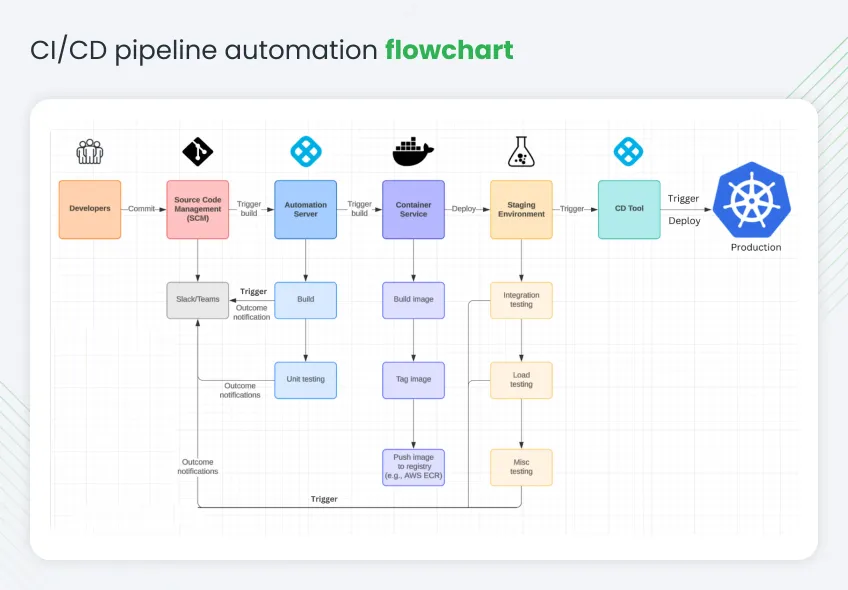

Identify integration points

No pipeline exists in a vacuum. Real-world builds almost always depend on multiple systems talking to each other.

Defining your integration points up front helps avoid bottlenecks and makes debugging easier.

- Pull requests trigger the pipeline: When someone raises a PR, the pipeline automatically runs tests and builds, improving quality from the start.

- Webhooks to third-party tools: Integrate tools like Jira, GitHub, or Datadog to receive and send data as part of your workflow.

- Slack or Teams updates when a stage completes: Real-time notifications keep your team in the loop without switching dashboards.

- Lambda functions to clean up resources after tests: Run small custom scripts post-build to deallocate infrastructure or reset configurations.
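
For the Slack bullet, one possible approach is an Amazon EventBridge rule that forwards CodePipeline execution state-change events to a small Lambda function. The sketch below makes three assumptions: the event carries `pipeline`, `execution-id`, and `state` in its `detail`; the target is a Slack incoming webhook; and the webhook URL lives in a hypothetical `SLACK_WEBHOOK_URL` environment variable. Only the formatting function is exercised without network access.

```python
import json
import os
import urllib.request

def format_message(event):
    """Turn a CodePipeline execution state-change event into Slack text."""
    detail = event.get("detail", {})
    state = detail.get("state", "UNKNOWN")
    icon = {"SUCCEEDED": ":white_check_mark:", "FAILED": ":x:"}.get(
        state, ":information_source:")
    return (f"{icon} Pipeline *{detail.get('pipeline', '?')}* "
            f"execution {detail.get('execution-id', '?')} is {state}")

def post_to_slack(text, webhook_url):
    """POST a JSON payload to a Slack incoming webhook."""
    req = urllib.request.Request(
        webhook_url,
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status

def handler(event, context):
    # SLACK_WEBHOOK_URL is a hypothetical variable set on the Lambda function.
    return post_to_slack(format_message(event), os.environ["SLACK_WEBHOOK_URL"])
```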

Select Event Triggers

You want your pipeline to respond to the right signals automatically. Triggers are how you keep the flow tight, timely, and reactive to change.

- Code pushed to a certain branch: Only trigger builds when the main, staging, or release branches are updated.

- A tag or version release: Automate versioned releases by responding to Git tags.

- Scheduled time-based deployments: Run builds or tests at regular intervals, ideal for QA validation or nightly builds.
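
The branch and tag rules above boil down to pattern matching on the Git ref that changed. The sketch below illustrates that logic locally with Python's `fnmatch`. It is not CodePipeline's trigger API, although V2 pipelines support similar branch and tag filters in their trigger configuration. The patterns themselves are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical trigger rules: build these branches, release on version tags.
BRANCH_PATTERNS = ["main", "staging", "release/*"]
TAG_PATTERNS = ["v*.*.*"]

def should_trigger(ref):
    """Decide whether a pushed Git ref should start the pipeline.

    Returns "build" for watched branches, "release" for version tags,
    and None for anything else.
    """
    if ref.startswith("refs/heads/"):
        branch = ref[len("refs/heads/"):]
        if any(fnmatchcase(branch, p) for p in BRANCH_PATTERNS):
            return "build"
    elif ref.startswith("refs/tags/"):
        tag = ref[len("refs/tags/"):]
        if any(fnmatchcase(tag, p) for p in TAG_PATTERNS):
            return "release"
    return None
```

Scheduled runs usually sit outside this logic. For example, an EventBridge schedule rule can start the pipeline at a fixed time each night.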

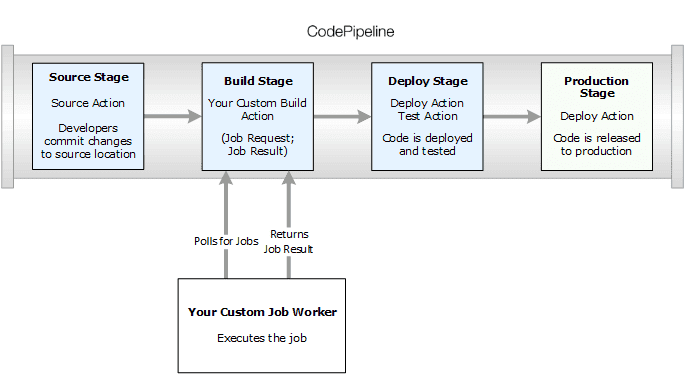

Configuring CodePipeline for automation

Once the flow is clear, set up AWS CodePipeline to control each step. This is where you decide how your automation actually behaves.

Integrate source repositories

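Choose your source. For GitHub, the recommended option is a connection (AWS CodeConnections, formerly AWS CodeStar Connections), which authenticates through a GitHub App. The older OAuth-based GitHub action still exists but is no longer recommended. CodeCommit is native to AWS. Once connected, the pipeline listens for changes and immediately starts the workflow.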

Add approval actions

You might not want everything to go out automatically. Add manual approvals where human judgment is required, like before pushing to production.

Deploy to multiple environments

Your CI/CD pipeline should be smart enough to handle the nuances of different environments.

Deploying to dev, staging, and production isn’t just about pushing the same thing everywhere; it's about customizing each deployment to its context and risk level.

- Development environments: Here, you want fast, frequent deployments. It is acceptable for things to break occasionally because this is your sandbox, so keep approval steps to a minimum and prioritize speed.

- Staging environments: This is your dress rehearsal. Use it to mirror production as closely as possible. Add testing gates, environment variable overrides, and a manual approval before production.

- Production environments: This is where the stakes are highest. Your deployments should be safe, traceable, and auditable. Use IAM roles to control access, approval gates to validate readiness, and rollback mechanisms to handle failure gracefully.
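
One way to encode those per-environment differences is to generate the deploy stages from a small settings table. A manual approval action is inserted only where the table asks for one. The environment names, the settings, and the S3-based `deploy_action` placeholder are hypothetical. The approval action uses CodePipeline's built-in `Approval` category with the `Manual` provider.

```python
# Hypothetical per-environment policy: approvals only where risk is higher.
ENVIRONMENTS = [
    {"name": "dev", "approval": False},
    {"name": "staging", "approval": False},
    {"name": "prod", "approval": True},
]

def approval_action(env):
    """CodePipeline's built-in manual approval action."""
    return {
        "name": f"Approve-{env}",
        "actionTypeId": {"category": "Approval", "owner": "AWS",
                         "provider": "Manual", "version": "1"},
        "configuration": {"CustomData": f"Approve release to {env}?"},
        "runOrder": 1,
    }

def deploy_action(env, run_order):
    """Placeholder deploy action; swap in ECS, CodeDeploy, etc. as needed."""
    return {
        "name": f"Deploy-{env}",
        "actionTypeId": {"category": "Deploy", "owner": "AWS",
                         "provider": "S3", "version": "1"},
        "configuration": {"BucketName": f"myapp-{env}", "Extract": "true"},
        "inputArtifacts": [{"name": "BuildOutput"}],
        "runOrder": run_order,
    }

def deploy_stages(environments=ENVIRONMENTS):
    stages = []
    for env in environments:
        if env["approval"]:
            # The approval (runOrder 1) must finish before the deploy (runOrder 2).
            actions = [approval_action(env["name"]), deploy_action(env["name"], 2)]
        else:
            actions = [deploy_action(env["name"], 1)]
        stages.append({"name": f"Deploy-{env['name']}", "actions": actions})
    return stages
```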

Use CodePipeline custom actions

CodePipeline lets you define each of these environments as separate stages.

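You can inject environment-specific parameters using Systems Manager Parameter Store and configure dynamic conditions to skip or include stages based on branch, time, or event. Beyond the built-in actions, Lambda invoke actions and custom actions let you add steps such as these: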

- Run a Lambda function to trigger database seeding.

- Post messages to your team’s Slack channel.

- Run vulnerability scans before deployment.
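
When a Lambda function runs as a CodePipeline invoke action, such as the database-seeding step, it must report success or failure back to CodePipeline. Otherwise the action eventually times out. A minimal sketch of that handshake follows. The `seed_database` helper and its JSON `UserParameters` format are hypothetical. The CodePipeline client is passed in so the logic can be exercised without AWS credentials.

```python
import json

def seed_database(params):
    """Hypothetical seeding step; replace with real logic."""
    if "environment" not in params:
        raise ValueError("UserParameters must include 'environment'")
    return f"seeded {params['environment']}"

def handler(event, context, client=None):
    if client is None:
        import boto3  # bundled with the Lambda Python runtime
        client = boto3.client("codepipeline")
    job = event["CodePipeline.job"]
    job_id = job["id"]
    try:
        config = job["data"]["actionConfiguration"]["configuration"]
        result = seed_database(json.loads(config.get("UserParameters", "{}")))
        client.put_job_success_result(jobId=job_id)
        return result
    except Exception as exc:
        # Report the failure so the pipeline stops instead of waiting.
        client.put_job_failure_result(
            jobId=job_id,
            failureDetails={"type": "JobFailed", "message": str(exc)},
        )
        return None
```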

Implementing CodeBuild for efficient builds

AWS CodeBuild is where things get done. It compiles your app, runs tests, and packages it into something ready for deployment.

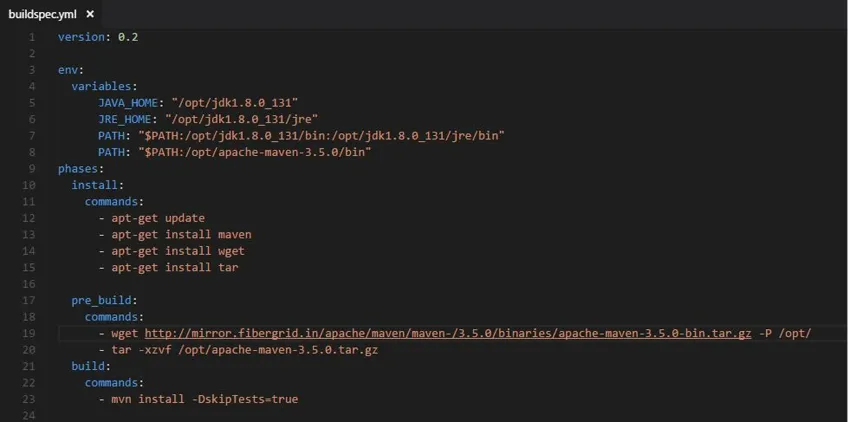

Configure buildspec.yml

This file is the brain behind every CodeBuild project. Think of it as a set of instructions that tells AWS exactly what to do with your code.

Define how the environment should be set up, what commands to run during the build, where the output artifacts should be saved, and even what to do if something goes wrong.

A good buildspec.yml can make the difference between a one-click deploy and hours of manual patchwork.

- Set environment variables: Put non-sensitive values in the variables block. Reference secrets from AWS Secrets Manager or Parameter Store instead of hardcoding them in the file.

- Add test commands: Specify everything from linting to unit and integration tests. These commands should be placed in the pre_build or build phases.

- Define where artifacts go: Use the artifacts block to point CodeBuild at the final package directory, and CodeBuild uploads those files to Amazon S3. For container builds, push the Docker image to Amazon ECR with docker push commands in the build or post_build phase.
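
Here is a starter buildspec.yml covering the three points above, written for a hypothetical Node.js project. It is kept as a string in a short Python helper that writes it to the repository root, which is where CodeBuild looks for buildspec.yml by default. Several values are assumptions: the Node.js runtime version, the npm scripts, the Parameter Store and Secrets Manager names, and the `dist` output folder. The top-level keys and phase names follow the buildspec version 0.2 format.

```python
from pathlib import Path

# Starter buildspec for a hypothetical Node.js app. Parameter and secret
# names, npm scripts, and the dist/ folder are placeholders.
BUILDSPEC = """\
version: 0.2

env:
  variables:
    NODE_ENV: test
  parameter-store:
    API_URL: /myapp/dev/api-url          # plain config from Parameter Store
  secrets-manager:
    DB_PASSWORD: myapp/dev/db:password   # secret-id:json-key

phases:
  install:
    runtime-versions:
      nodejs: 18
    commands:
      - npm ci
  pre_build:
    commands:
      - npm run lint                     # fail fast on lint errors
  build:
    on-failure: ABORT
    commands:
      - npm test
      - npm run build
  post_build:
    commands:
      - echo "Build succeeding flag is $CODEBUILD_BUILD_SUCCEEDING"

artifacts:
  base-directory: dist
  files:
    - '**/*'

cache:
  paths:
    - '/root/.npm/**/*'                  # npm download cache, reused by npm ci
"""

def write_buildspec(directory="."):
    """Write buildspec.yml into the given repository root."""
    path = Path(directory) / "buildspec.yml"
    path.write_text(BUILDSPEC, encoding="utf-8")
    return path
```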

Enable build caching and choose compute types

- Use local caching to avoid downloading dependencies on every run, or an Amazon S3 cache when the cache needs to persist across build hosts.

- Pick compute types based on workload. A small general-purpose type is fine for small apps. For memory- or CPU-heavy builds, step up to a larger compute type.
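
In the CodeBuild API (for example boto3's `create_project`), both choices live in the project's `environment` and `cache` settings. The sketch below picks a compute type from a rough peak-memory estimate. The selection rule is our own assumption, and the memory figures are approximate values for Linux builds. The compute-type names, cache modes, and `aws/codebuild/standard:7.0` image are real CodeBuild values, but check them against the current documentation.

```python
# On-demand Linux compute types in ascending size, with approximate memory in GB.
COMPUTE_TYPES = [
    ("BUILD_GENERAL1_SMALL", 3),
    ("BUILD_GENERAL1_MEDIUM", 7),
    ("BUILD_GENERAL1_LARGE", 15),
    ("BUILD_GENERAL1_2XLARGE", 145),
]

def pick_compute_type(peak_memory_gb):
    """Return the smallest compute type whose memory covers the estimate."""
    for name, memory_gb in COMPUTE_TYPES:
        if peak_memory_gb <= memory_gb:
            return name
    raise ValueError("workload exceeds the largest listed compute type")

def project_settings(peak_memory_gb, docker=False):
    """environment and cache settings for a CodeBuild project."""
    modes = ["LOCAL_SOURCE_CACHE", "LOCAL_CUSTOM_CACHE"]
    if docker:
        modes.append("LOCAL_DOCKER_LAYER_CACHE")
    return {
        "environment": {
            "type": "LINUX_CONTAINER",
            "image": "aws/codebuild/standard:7.0",
            "computeType": pick_compute_type(peak_memory_gb),
            # Docker builds and Docker layer caching need privileged mode.
            "privilegedMode": docker,
        },
        "cache": {"type": "LOCAL", "modes": modes},
    }
```

Local caches are best-effort and only help when consecutive builds land on the same host. That is why an S3 cache is the steadier choice for infrequent builds.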

Integrate quality gates

Don’t let bad code slip through. Quality gates let you:

- Use tools like SonarQube or ESLint to check code

- Integrate Amazon Inspector or third-party scanners to catch vulnerabilities

- Fail the build if checks don’t pass
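
Failing the build works because CodeBuild marks a phase as failed when a command exits with a non-zero status. A small gate script can turn a report into that exit code. This sketch reads ESLint's JSON formatter output (`eslint -f json`), which reports `errorCount` and `warningCount` per file. The warning budget is a hypothetical policy.

```python
import json

def eslint_gate(report_json, max_warnings=0):
    """Return (exit_code, summary) for an ESLint JSON report.

    Any error fails the gate; warnings fail it only above the budget.
    """
    results = json.loads(report_json)
    errors = sum(r.get("errorCount", 0) for r in results)
    warnings = sum(r.get("warningCount", 0) for r in results)
    failed = errors > 0 or warnings > max_warnings
    summary = f"{errors} error(s), {warnings} warning(s)"
    return (1 if failed else 0), summary
```

In a build phase, you might write the report with `npx eslint . -f json -o eslint.json`. A wrapper script would then call `sys.exit(eslint_gate(report)[0])`, so the exit code alone decides whether CodeBuild fails the phase.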

Manage artifacts

Send your finished build to Amazon S3 or Amazon ECR. This step ensures that every successful build is versioned, accessible, and traceable.
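
To make every artifact traceable, one option is to name it after the build that produced it. CodeBuild exposes two environment variables for this: `CODEBUILD_BUILD_NUMBER` and `CODEBUILD_RESOLVED_SOURCE_VERSION`, the commit ID. The `myapp` names, account ID, and the S3 key and ECR tag layouts below are hypothetical conventions.

```python
import os

def artifact_version(env=os.environ):
    """Combine build number and short commit SHA, e.g. '42-1a2b3c4'."""
    build_number = env.get("CODEBUILD_BUILD_NUMBER", "local")
    commit = env.get("CODEBUILD_RESOLVED_SOURCE_VERSION", "unknown")
    return f"{build_number}-{commit[:7]}"

def s3_key(app, version):
    """Hypothetical S3 layout: one folder per build."""
    return f"{app}/builds/{version}/{app}.zip"

def ecr_image_uri(account, region, repo, version):
    """ECR image URI tagged with the build version."""
    return f"{account}.dkr.ecr.{region}.amazonaws.com/{repo}:{version}"
```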

Monitoring and optimizing pipelines

Even the best pipelines need continuous tuning. Once your AWS CI/CD pipelines are in place, it's time to track how they perform, catch issues early, and keep both speed and cost under control.

Monitoring gives you the data, and optimization helps make the pipeline faster, safer, and more reliable.

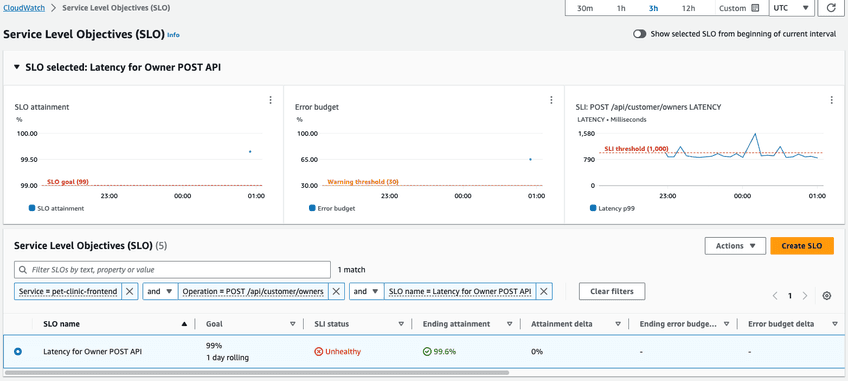

Monitor with CloudWatch

This is your window into pipeline health. Amazon CloudWatch collects CodeBuild logs and metrics. CodePipeline publishes pipeline, stage, and action state changes as Amazon EventBridge events that you can monitor and alert on.

- Track pipeline duration and failures: Identify bottlenecks or unusually long stages that can slow down releases.

- Visualize trends across builds: Use CloudWatch dashboards to monitor patterns over time, such as spikes in failures or degraded performance.

- Set alerts if builds take longer than expected: To catch issues early, create alarms based on thresholds for build duration or failure rates.
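
As an example of the last bullet, the request below has the shape boto3's `put_metric_alarm` accepts. It raises an alarm when a project's average build duration crosses a threshold. It assumes CodeBuild's `Duration` metric in the `AWS/CodeBuild` namespace, reported in seconds per `ProjectName`. The project name, threshold, and SNS topic are placeholders.

```python
def build_duration_alarm(project, threshold_minutes, topic_arn):
    """Alarm request for slow CodeBuild builds (pass to put_metric_alarm)."""
    return {
        "AlarmName": f"{project}-slow-builds",
        "Namespace": "AWS/CodeBuild",
        "MetricName": "Duration",
        "Dimensions": [{"Name": "ProjectName", "Value": project}],
        "Statistic": "Average",
        "Period": 3600,                          # evaluate hourly averages
        "EvaluationPeriods": 1,
        "Threshold": threshold_minutes * 60,     # the metric is in seconds
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",      # an hour with no builds is fine
        "AlarmActions": [topic_arn],
    }
```

With credentials configured, this could be applied as `boto3.client("cloudwatch").put_metric_alarm(**build_duration_alarm("myapp-build", 15, topic_arn))`.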

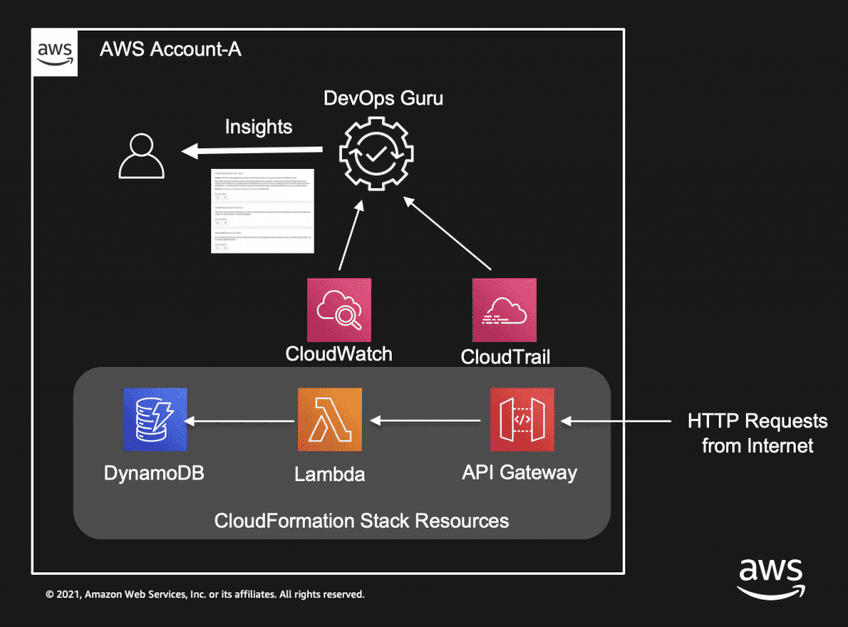

Add DevOps Guru

Amazon DevOps Guru uses machine learning to analyze metrics and logs across AWS services, find anomalies, and suggest improvements.

- It spots unusual behavior: Whether it’s longer deployment times or recurring failures, it flags them without manual intervention.

- Recommends fixes or optimizations: Offers contextual guidance based on detected patterns.

- Works across services to tie together operational signals: Looks at CodePipeline, Lambda, RDS, and more to understand the bigger picture.

Track costs with AWS Budgets and Cost Explorer

CI/CD can become expensive if not tracked. Cost monitoring helps avoid unexpected spikes and lets you optimize spending over time.

- Set budgets for CodeBuild usage: Define limits to avoid overruns, especially during large-scale testing phases.

- Monitor expensive stages: Identify which pipeline or build types consume the most resources.

- Use tagging to see costs by team or feature: Assign tags to projects or services to break down costs in a way that reflects internal reporting.
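
Before setting a budget, a back-of-the-envelope estimate helps. CodeBuild on-demand pricing is quoted per build minute and varies by compute type and Region. The rate used below is therefore a placeholder; replace it with the current figure from the AWS pricing page. The workload numbers are also hypothetical.

```python
def monthly_build_cost(builds_per_day, avg_minutes, rate_per_minute, days=30):
    """Estimate monthly CodeBuild spend from build volume and duration."""
    return builds_per_day * avg_minutes * rate_per_minute * days

def budget_alert(cost, budget, threshold=0.8):
    """True once spend reaches the given fraction of the budget."""
    return cost >= budget * threshold
```

For instance, 40 builds a day averaging 6 minutes at a placeholder rate of 0.01 per minute comes to about 72 per month. AWS Budgets can then notify you when actual or forecasted spend crosses a threshold you choose.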


Create dashboards for SLOs

You need visibility into service-level metrics to keep engineering and business goals aligned.

- Display release frequency: Track how often new code is deployed to production.

- Show lead time for changes: Measure how long it takes from code commit to live deployment.

- Track mean time to recovery (MTTR): Assess how quickly teams restore service after failures.

- Align these to product goals: Create custom dashboards in Grafana or QuickSight to report progress and inform decision-making.
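
The numbers behind such a dashboard are simple to compute once you have deployment records. The sketch below derives four values from a hypothetical list of records: deployment frequency, median lead time, change failure rate, and MTTR. Each record holds the commit time, the deploy time, whether the deploy failed, and when service was restored. In practice, the timestamps might come from CodePipeline execution history and your incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set once a failed deploy is fixed

def dora_metrics(deploys, period_days):
    """Compute DORA-style delivery metrics from deployment records."""
    if not deploys:
        raise ValueError("need at least one deployment")
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "mttr": sum(recoveries, timedelta()) / len(recoveries) if recoveries else None,
    }
```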

How streamlined CI/CD pipelines function with AWS CodePipeline and CodeBuild

When everything is wired correctly, a streamlined AWS CI/CD pipeline automates and transforms your entire development lifecycle.

Here's how it functions across critical areas:

Function 1: Automated code integration

Every time a developer commits code, AWS CodePipeline detects it, triggers a build with CodeBuild, and automatically moves it to staging. There is no need to nudge it along.

Use Case: Microservices architectures on AWS often involve multiple teams committing changes daily. This setup keeps all components in sync and avoids bottlenecks caused by manual integrations.

Function 2: Continuous testing and validation

CodeBuild is responsible for compiling the code, executing defined test suites, and performing validation checks before any deployment step.

Use Case: Financial services teams run compliance and vulnerability checks before anything reaches production, using tools such as Amazon Inspector, SonarQube, and automated QA tests.

Function 3: Artifact management and deployment

Once the build is complete, artifacts are shipped to S3 or Amazon ECR. Depending on the stack, they’re deployed via ECS, Lambda, or EC2.

Use Case: Manage container images for a Dockerized application and push new versions into ECR for ECS rollout.
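
For the ECS case, CodePipeline's standard ECS deploy action reads an `imagedefinitions.json` file from the build output. The file is a JSON list that maps each container name in the task definition to the image URI to roll out. A small helper that writes it at the end of a build might look like this; the container name and image URI are placeholders.

```python
import json
from pathlib import Path

def write_image_definitions(container_name, image_uri, directory="."):
    """Write imagedefinitions.json for CodePipeline's standard ECS deploy action."""
    path = Path(directory) / "imagedefinitions.json"
    payload = [{"name": container_name, "imageUri": image_uri}]
    path.write_text(json.dumps(payload), encoding="utf-8")
    return path
```

The file then needs to be listed under `files` in the buildspec's artifacts block, so it reaches the deploy stage as part of the build output.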

Function 4: Rollback and recovery mechanisms

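Failed deployments can be rolled back automatically to preserve stability. Blue/green and canary strategies are typically configured in AWS CodeDeploy, which CodePipeline can run as a deploy action and which can roll back automatically on failure or on a CloudWatch alarm. V2 pipelines can also roll a failed stage back to the last successful execution.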

Use Case: SaaS platforms using blue/green deployments to reduce user disruption during updates.
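
The core of a canary rollout is a loop: shift a slice of traffic, check a health signal, then either continue or roll back. On AWS this is usually delegated to CodeDeploy deployment configurations and CloudWatch alarms. The sketch below only simulates that decision logic. The traffic steps, the error-rate threshold, and the `get_error_rate` callback (standing in for real monitoring) are all hypothetical.

```python
from typing import Callable, Sequence

def run_canary(get_error_rate: Callable[[int], float],
               steps: Sequence[int] = (10, 50, 100),
               max_error_rate: float = 0.01):
    """Shift traffic step by step; roll back on the first unhealthy step.

    Returns ("promoted", final_percent) or ("rolled_back", percent_at_failure).
    """
    for percent in steps:
        # In a real rollout, traffic shifts here and the release bakes for a while.
        if get_error_rate(percent) > max_error_rate:
            return "rolled_back", percent
    return "promoted", steps[-1]
```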

Function 5: Cost optimization and resource scaling

CodeBuild provisions compute only while a build runs and can run many builds concurrently, so capacity scales up and down with build volume.

Use Case: Companies managing fluctuating workloads, such as seasonal traffic surges or burst testing, can avoid paying for idle infrastructure.

Conclusion

AWS CI/CD pipelines powered by CodePipeline and CodeBuild allow you to go from slow, error-prone releases to a modern, automated delivery process. The combination of automated triggers, customized build logic, and real-time feedback loops means your teams spend less time managing infrastructure and more time shipping quality code.

Remember, these systems don’t just run builds; they enable continuous improvement. Every commit, test, deploy, and rollback gives you feedback you can use to make your pipeline faster, more cost-efficient, and more scalable.

If you’re ready to stop firefighting pipeline failures and start building a trustworthy system, it might be time to rethink your CI/CD strategy with AWS.