Artificial intelligence is now used across healthcare for a variety of purposes, such as monitoring remote patients, analysing scans, and automating routine queries. But when AI software works with patient data, it must meet the rules set by regulations like HIPAA and GDPR. These rules are crucial because they keep patients’ sensitive data secure and set consistent standards for how organizations handle it.

In short, HIPAA is a US regulation that protects patient health information by setting rules for how data is stored, accessed, and shared in healthcare systems. It applies to hospitals, clinics, insurers, and any software handling electronic health data in the USA.

GDPR, on the other hand, is a European law that governs how personal data is collected, processed, and used across all sectors, including healthcare.

While HIPAA focuses on health-specific data in the US, GDPR covers broader personal data rights and applies to any organization handling the personal data of people in the EU, no matter where the organization is based.

If you’re planning to build AI healthcare software for the US or EU markets, or if you are choosing an AI development company, check their compliance expertise early on. This avoids costly rework and builds trust from day one.

Let’s dive into the key details for developing AI healthcare software that meets compliance requirements from the beginning.

But first, which organizations need to follow these laws? Let’s find out.

Who needs to follow HIPAA & GDPR?

Any company that handles patient or personal health data may fall under HIPAA or GDPR, depending on where they operate and who they serve.

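HIPAA applies to covered entities in the United States (healthcare providers, health plans, and healthcare clearinghouses) and to their business associates, meaning vendors that handle protected health information on their behalf. This can include AI software development companies building healthcare apps or managing medical records.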

GDPR covers organizations that collect or process the personal data of individuals in the EU, regardless of their citizenship or where the company is based. If your AI system stores or analyzes health-related data from EU users, GDPR rules apply.

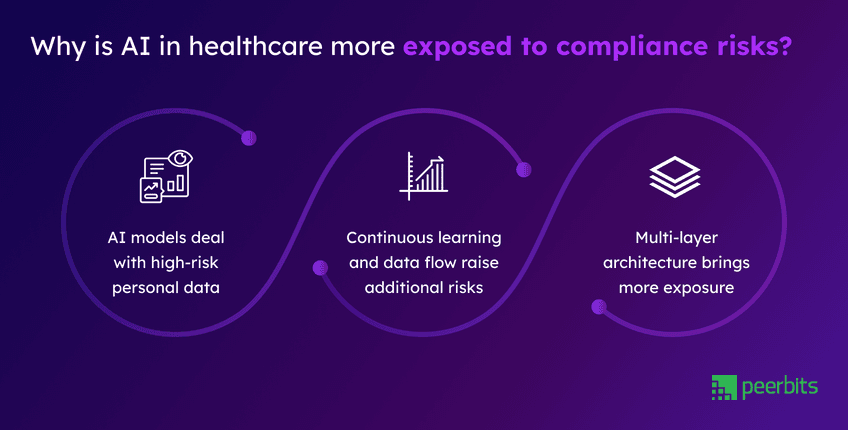

What makes AI in healthcare more sensitive to compliance risks?

AI in healthcare involves large volumes of personal and medical data moving across multiple systems. That alone raises risk, and when AI is involved, the way data is used, stored, and shared becomes even harder to control. This makes compliance important at every layer.

AI models deal with high-risk personal data

Healthcare AI systems use data like patient histories, medical images, test results, and clinical notes. These fall under protected data categories defined in HIPAA and GDPR.

Most training pipelines depend on datasets that include PII (personally identifiable information) or PHI (protected health information), meaning the data is stored and used to shape model behavior. Because of this, privacy risks can begin long before the product is live.

Continuous learning and data flow raise additional risks

Some AI systems, such as those used in patient monitoring or diagnostics, are designed to keep learning over time. New data keeps coming in from users, devices, or third-party sources.

Without proper controls, this can lead to unnoticed data leaks or unclear decision paths. These are common compliance issues in healthcare systems built without auditability in mind.

Multi-layer architecture brings more exposure

Modern AI healthcare systems combine wearables, IoT-based monitoring for continuous care, edge computing, cloud infrastructure, and mobile apps. Data flows through all these components, creating several possible failure points.

If one part of the system lacks proper encryption or access controls, it can break overall compliance. This is why AI healthcare software must be planned as a whole rather than in isolated parts.

What do HIPAA and GDPR expect from AI healthcare systems?

When you are building AI healthcare software, compliance affects more than just data storage. Both HIPAA (Health Insurance Portability and Accountability Act) and GDPR (General Data Protection Regulation) define how personal health data can be used, especially when AI is involved in analysis or decision-making.

GDPR: Lawful basis, consent, data limits, and explanation rights

- GDPR requires a clear legal reason, known as a lawful basis, for using personal data.

- It expects you to collect only the necessary information, following the principle of data minimization.

- Consent must be clear, specific, and include how your AI healthcare system will use the data.

- A Data Protection Officer (DPO) is often needed when processing sensitive health records on a large scale.

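- The so-called right to explanation, which stems from GDPR’s rules on automated decision-making, means users can ask how a decision that significantly affects them was made.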

- If your model is a black box, you may need supporting features that explain outcomes.
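As a rough illustration of data minimization, the sketch below passes only the fields that a declared purpose needs. The purpose names, field names, and the `minimize` helper are hypothetical and are not taken from GDPR or from any library.

```python
# Hypothetical allow-list: which fields each declared purpose may receive.
ALLOWED_FIELDS = {
    "triage_model": {"age_band", "symptoms", "heart_rate"},
    "billing": {"patient_id", "insurance_plan"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields that the given purpose is allowed to see."""
    if purpose not in ALLOWED_FIELDS:
        # Refuse unknown purposes instead of passing everything through.
        raise ValueError(f"no allow-list defined for purpose: {purpose}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "patient_id": "P-1001",
    "name": "Jane Doe",
    "age_band": "40-49",
    "symptoms": ["cough", "fever"],
    "heart_rate": 92,
    "insurance_plan": "basic",
}
model_input = minimize(raw, "triage_model")
print(sorted(model_input))  # ['age_band', 'heart_rate', 'symptoms']
```

Tying each allow-list to a named purpose also gives you something concrete to reference in consent text and audits.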

HIPAA: Protecting ePHI, access rules, and third-party contracts

- HIPAA covers any system that handles electronic protected health information (ePHI), from medical images to health scores.

- The Privacy Rule controls who can use and disclose data, while the Security Rule sets safeguards such as access controls, encryption, and audit logging.

- If you work with outside vendors, you’ll also need a Business Associate Agreement (BAA) to hold them to the same rules.

- Together, these form the base requirements for building HIPAA compliant AI solutions.

What applies to AI systems under these laws?

- Even model outputs can be protected data if they reveal personal details. This often gets missed in early design.

- Consent also applies to how data is used in predictions or automated actions.

- If your system scores, flags, or responds to users based on input, that needs to be disclosed.

- Under GDPR, the right to explanation limits how black-box models can be used in healthcare. Your design should allow transparency where needed.

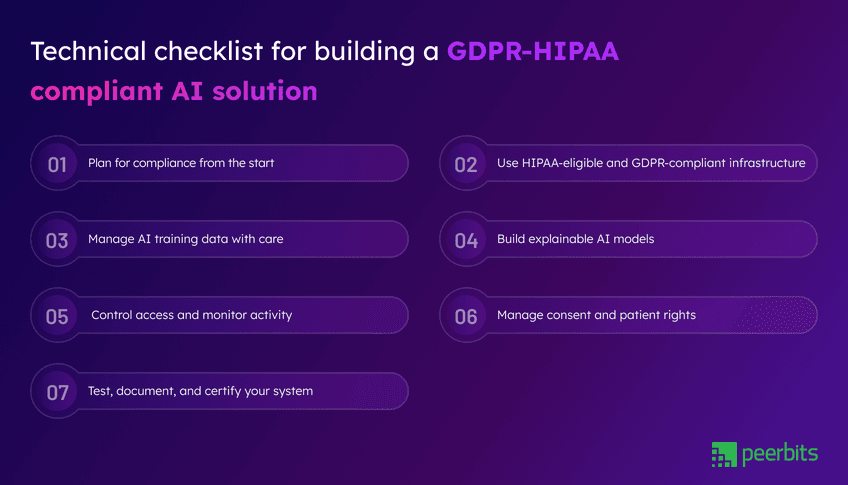

How to prepare your AI system for GDPR and HIPAA compliance

When you are building an AI system for healthcare, planning for compliance needs to begin early. Each phase of your software lifecycle, such as design, development, training, and deployment, needs clear steps to meet privacy and security standards.

Below is a checklist that follows the flow of building such a system, keeping both GDPR and HIPAA in focus.

1. Plan for compliance from the start

Begin by mapping how patient data will move through your system. Identify each data source, how it is used, and where it is stored. This helps you maintain traceability and reduces confusion during audits.

Include your legal or privacy team early. If your organization has a Data Protection Officer, make sure they are part of system planning. Their input can prevent gaps and reduce rework later.
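One lightweight way to start the data map is to keep it as structured data that can be queried. The sketch below is only an illustration: the `DataFlow` fields and the example hops are assumptions, not a required format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFlow:
    source: str          # where the data comes from
    destination: str     # where it is sent or stored
    contains_phi: bool   # does this hop carry patient health data?
    encrypted: bool      # is this hop encrypted?
    purpose: str         # why the data moves


# Hypothetical flows for a remote monitoring product.
FLOWS = [
    DataFlow("wearable", "mobile_app", True, True, "vitals collection"),
    DataFlow("mobile_app", "cloud_api", True, True, "remote monitoring"),
    DataFlow("cloud_api", "training_store", True, False, "model training"),
    DataFlow("cloud_api", "metrics_dashboard", False, False, "usage stats"),
]


def unprotected_phi_flows(flows):
    """List hops that carry PHI without encryption, for review."""
    return [f for f in flows if f.contains_phi and not f.encrypted]


for flow in unprotected_phi_flows(FLOWS):
    print(f"review: {flow.source} -> {flow.destination} ({flow.purpose})")
```

Keeping the map in version control next to the code makes it easier to show auditors how data paths changed over time.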

2. Use HIPAA-eligible and GDPR-compliant infrastructure

When using platforms like AWS, Azure, or GCP, choose services that support HIPAA and GDPR requirements. These platforms offer healthcare-ready features and region-specific settings to meet compliance needs.

Select regions based on your patient base, especially when GDPR rules apply. Understand which parts of compliance are your responsibility and which are handled by the cloud provider.

Use encrypted storage, secure transmission, firewalls, and IP filtering. Make sure activity logging and alerts are active to detect unusual behavior.
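The settings above can be checked automatically before a deployment goes out. This is a minimal sketch under stated assumptions: the config keys, the approved region list, and the `check_storage_config` helper are hypothetical, and the region list reflects an internal policy choice rather than a rule written into GDPR.

```python
# Hypothetical policy values; adjust to your own legal and security guidance.
APPROVED_EU_REGIONS = {"eu-west-1", "eu-central-1"}
MIN_TLS = (1, 2)


def check_storage_config(config: dict, serves_eu_patients: bool) -> list:
    """Return human-readable findings; an empty list means none were found."""
    findings = []
    if not config.get("encryption_at_rest", False):
        findings.append("encryption at rest is disabled")
    tls = tuple(int(part) for part in str(config.get("min_tls", "0.0")).split("."))
    if tls < MIN_TLS:
        findings.append("minimum TLS version is below 1.2")
    if not config.get("access_logging", False):
        findings.append("access logging is disabled")
    if serves_eu_patients and config.get("region") not in APPROVED_EU_REGIONS:
        findings.append("region is outside the approved EU list")
    return findings


config = {
    "region": "us-east-1",
    "encryption_at_rest": True,
    "min_tls": "1.0",
    "access_logging": True,
}
for finding in check_storage_config(config, serves_eu_patients=True):
    print("finding:", finding)
```

A check like this only covers your side of the shared responsibility model; the provider's own controls still need to be confirmed through its compliance documentation.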

3. Manage AI training data with care

AI models often need medical data to learn. Before using any dataset, remove or replace details that could identify someone.

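HIPAA calls this de-identification, which can be done by removing a defined list of identifiers (Safe Harbor) or through expert determination. GDPR treats only fully anonymized data as outside its scope; pseudonymized data still counts as personal data. Even so, techniques like pseudonymization and split-key encryption help reduce risk.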
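To make pseudonymization concrete, here is a minimal sketch that drops direct identifiers and replaces the patient ID with a keyed hash using Python's standard `hmac` module. The field names and the `pseudonymize` helper are hypothetical. Note that this is pseudonymization, not anonymization: anyone holding the key can re-link records, so the output should still be treated as personal data.

```python
import hashlib
import hmac

# Hypothetical field names; real datasets will differ.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "address"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and replace patient_id with a keyed hash."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    token = hmac.new(
        secret_key, str(record["patient_id"]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned["patient_id"] = token
    return cleaned


# Placeholder key for the example; load real keys from a secrets manager.
key = b"example-key-do-not-use"
record = {"patient_id": 42, "name": "Jane Doe", "email": "jane@example.com", "hr": 80}
print(pseudonymize(record, key))
```

Using a keyed hash rather than a plain hash means the tokens cannot be rebuilt by someone who simply guesses patient IDs, as long as the key is stored separately from the dataset.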

Whether your system uses batch data or real-time input, keep it protected with strict access rules. Store datasets securely, log all usage, and keep a record of which data was used to train which model.
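Keeping a record of which data trained which model can start as a simple registry. The sketch below is an in-memory illustration with hypothetical names (`LineageRegistry`, the model versions, the dataset labels); a real system would persist this in a database.

```python
import hashlib
import json


def dataset_fingerprint(records: list) -> str:
    """Hash a canonical JSON form of the records so changes are detectable."""
    canonical = json.dumps(records, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class LineageRegistry:
    """In-memory record of which dataset version trained which model."""

    def __init__(self):
        self._entries = []

    def record_training(self, model_version: str, dataset_name: str, records: list):
        self._entries.append({
            "model_version": model_version,
            "dataset_name": dataset_name,
            "fingerprint": dataset_fingerprint(records),
            # Pseudonymous subject tokens, kept so deletion requests can be traced.
            "subjects": {r["patient_id"] for r in records},
        })

    def models_trained_on(self, patient_token: str) -> list:
        """Models that may need review if this subject asks for deletion."""
        return sorted(
            e["model_version"] for e in self._entries if patient_token in e["subjects"]
        )


registry = LineageRegistry()
batch_a = [{"patient_id": "tok-1", "hr": 80}, {"patient_id": "tok-2", "hr": 95}]
batch_b = [{"patient_id": "tok-2", "hr": 97}]
registry.record_training("risk-model-v1", "vitals-batch-a", batch_a)
registry.record_training("risk-model-v2", "vitals-batch-b", batch_b)
print(registry.models_trained_on("tok-2"))  # ['risk-model-v1', 'risk-model-v2']
```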

4. Build explainable AI models

In healthcare, professionals and patients must be able to understand how AI-driven decisions are made. GDPR also gives individuals the right to ask for explanations when automated systems affect them.

Avoid black-box models when possible and prefer interpretable approaches such as rule-based logic. Where a complex model is necessary, use explanation methods like LIME or SHAP so that you can explain outcomes clearly.

Keep a record of each decision made by your system. This supports both audit readiness and safe use in medical settings.
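As one possible shape for an explainable decision record, the sketch below uses a small logistic scoring model whose per-feature contributions can be read directly from its weights. This is a plain interpretable model, not LIME or SHAP, and the weights, feature names, and model version are invented for illustration and are not clinically validated.

```python
import json
import math
from datetime import datetime, timezone

# Hypothetical weights for illustration only; not clinically validated.
WEIGHTS = {"age_over_65": 0.8, "resting_hr_over_100": 1.2, "prior_admission": 0.5}
BIAS = -1.5
MODEL_VERSION = "demo-risk-0.1"


def score_with_explanation(features: dict) -> dict:
    """Return a risk probability plus each feature's additive contribution."""
    contributions = {
        name: WEIGHTS[name] * float(features.get(name, 0)) for name in WEIGHTS
    }
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Everything needed to reconstruct the decision goes into one record.
    return {
        "model_version": MODEL_VERSION,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "inputs": features,
        "contributions": contributions,
        "probability": round(probability, 4),
    }


decision = score_with_explanation(
    {"age_over_65": 1, "resting_hr_over_100": 1, "prior_admission": 0}
)
print(json.dumps(decision, indent=2))
```

Storing the inputs, model version, and contributions together means a reviewer can later see both what the system decided and which factors drove it.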

5. Control access and monitor activity

Limit access to sensitive data by role. Use role-based access control to separate permissions for development, operations, support, and compliance teams.

Avoid shared accounts. Use named logins with clear access levels. All access should be logged in a way that cannot be edited or removed.

Set up real-time alerts for unusual activity. Review and update access lists regularly to stay in line with audit expectations.
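The sketch below combines a role check with a hash-chained audit log, where each entry includes the hash of the one before it. The roles, permissions, and class names are hypothetical. A hash chain makes edits detectable rather than impossible, so a production setup would likely also write logs to append-only storage.

```python
import hashlib
import json

# Hypothetical roles and permissions.
ROLE_PERMISSIONS = {
    "clinician": {"read_phi", "write_notes"},
    "ml_engineer": {"read_deidentified"},
    "support": set(),
    "compliance": {"read_audit_log"},
}
GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, user: str, action: str, allowed: bool):
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"user": user, "action": action, "allowed": allowed, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; any edited or removed entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("user", "action", "allowed", "prev")}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


def authorize(user: str, role: str, action: str, log: AuditLog) -> bool:
    """Check the role's permissions and log the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.append(user, action, allowed)
    return allowed


log = AuditLog()
print(authorize("dr.lee", "clinician", "read_phi", log))   # True
print(authorize("sam", "ml_engineer", "read_phi", log))    # False
print(log.verify())                                        # True
```

Denied attempts are logged as well, since repeated refusals are often the first sign of unusual activity.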

6. Manage consent and patient rights

Consent must be clear, traceable, and easy to withdraw. Keep a record of when it was given and what it applies to. This is important when data is used in AI-driven decisions.

Your system should support patient requests for data access, transfer, or deletion. In AI systems, this may involve retraining models or applying data removal techniques.

Planning for this early helps meet privacy standards and builds trust with end users.
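A consent record that is traceable and easy to withdraw can be modelled as an append-only history in which the latest event wins. This is a minimal in-memory sketch; the `ConsentRegistry` class and the purpose names are hypothetical.

```python
from datetime import datetime, timezone


class ConsentRegistry:
    """Keeps a history of consent events per patient and purpose."""

    def __init__(self):
        self._events = []  # append-only history, never edited in place

    def _record(self, patient_id: str, purpose: str, granted: bool):
        self._events.append({
            "patient_id": patient_id,
            "purpose": purpose,
            "granted": granted,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def grant(self, patient_id: str, purpose: str):
        self._record(patient_id, purpose, True)

    def withdraw(self, patient_id: str, purpose: str):
        self._record(patient_id, purpose, False)

    def is_allowed(self, patient_id: str, purpose: str) -> bool:
        """The most recent event for this patient and purpose decides."""
        for event in reversed(self._events):
            if event["patient_id"] == patient_id and event["purpose"] == purpose:
                return event["granted"]
        return False  # no record means no consent

    def history(self, patient_id: str) -> list:
        return [e for e in self._events if e["patient_id"] == patient_id]


consents = ConsentRegistry()
consents.grant("tok-1", "model_training")
print(consents.is_allowed("tok-1", "model_training"))  # True
consents.withdraw("tok-1", "model_training")
print(consents.is_allowed("tok-1", "model_training"))  # False
```

Because withdrawals are stored as new events rather than deletions, the history still shows when consent was valid, which helps when reviewing past AI-driven decisions.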

7. Test, audit, and document before deployment

Before deployment, test your system in a secure environment where data flow, permissions, and alerts can be reviewed.

Include compliance checks in your CI/CD pipeline, so testing happens regularly.

Consider third-party audits and certifications like SOC 2, ISO 27001, or HITRUST. These help validate your setup and show that your product is ready for healthcare environments. Maintain updated documentation and a clear response plan for security issues.
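One example of a compliance check that can run in CI is a scan of captured log output for identifier-like patterns. The patterns below are illustrative assumptions only and will miss many real identifiers, so treat this as a safety net rather than proof that logs are clean.

```python
import re

# Illustrative patterns only; real identifiers vary by country and system.
PHI_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "mrn": re.compile(r"\bMRN[:\s]*\d{6,}\b", re.IGNORECASE),
}


def find_phi(lines) -> list:
    """Return (line_number, pattern_name) pairs for suspicious lines."""
    hits = []
    for number, line in enumerate(lines, start=1):
        for name, pattern in PHI_PATTERNS.items():
            if pattern.search(line):
                hits.append((number, name))
    return hits


# Hypothetical log lines captured during a test run.
sample_log = [
    "INFO prediction served for token 9f2c in 42 ms",
    "DEBUG payload user=jane.doe@example.com score=0.81",
    "WARN retry for MRN: 00481516",
]
for line_number, kind in find_phi(sample_log):
    print(f"line {line_number}: possible {kind}")
```

In a pipeline, a test could fail the build whenever `find_phi` returns anything for the logs produced by the test suite.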

Real-world examples of compliant AI use in healthcare

Some healthcare companies have already built systems that combine AI with strong privacy practices. These examples show how it's possible to follow HIPAA and GDPR rules while building useful tools for doctors and patients.

IBM Watson Health

IBM Watson Health (rebranded as Merative after IBM sold the business in 2022) handles large volumes of ePHI for cancer research and clinical decision support. Its systems run on HIPAA-compliant infrastructure, using access control and de-identified datasets for training.

They maintain audit logs and review system access to meet ongoing compliance needs. This setup supports their goal of building AI healthcare software that works across hospitals and research centers.

Aidoc and Tempus

Aidoc and Tempus use AI to assist with medical imaging and diagnostics. Their tools work in real time and are built with privacy in mind.

They use HIPAA-compliant environments and include explainability features that help clinicians understand AI outputs. These examples show how AI in healthcare can be fast, reliable, and clear without ignoring compliance rules.

Babylon Health

Babylon Health offers digital consultations in Europe, focusing on GDPR-compliant AI systems. The platform gives users full control over their data with consent tools and transparency about how AI decisions are made.

They support patient rights such as data access and explanation, in line with GDPR. Their explainable AI design helps meet the GDPR expectation of clear, traceable decisions.

Common mistakes of AI healthcare teams with compliance

Even experienced teams can miss key compliance steps while building healthcare AI systems. These common gaps often lead to rework, delays, or audit failures. Planning for these early helps avoid risk later.

Retrofitting compliance after the MVP

Many teams focus on building the minimum product first and add compliance later. But once data pipelines, models, and workflows are in place, it becomes harder to apply controls without breaking the system.

Planning for AI healthcare compliance from the start, even in early prototypes, helps avoid large changes and unexpected costs later in development. It also builds trust with hospitals and partners early on.

Overlooking edge device risks

Systems that use wearables, home monitors, or bedside sensors often skip deeper checks for data security at the device level. These IoT components introduce new paths for personal data to move without proper control.

If edge devices collect or process patient data, they fall within the scope of the same healthcare data regulations. Weakness at this layer can lead to serious compliance violations under HIPAA.

Gaps in decision logging and access control

AI decisions need to be traceable. If your system recommends treatment or flags a medical risk, there should be a record of what data was used and why the output was generated.

Many systems also miss access granularity. Teams use shared accounts or open access policies during testing and forget to fix them before production. This creates audit risks and weakens your HIPAA-compliant AI solution.

Build compliance-ready AI healthcare systems with Peerbits

Building compliant healthcare AI products requires more than ticking off security tasks. You need the right structure, privacy-focused design, and audit-ready records from the start. Our team supports all of this from early planning to deployment.

Full-stack development for GDPR and HIPAA-aligned AI

We work with healthcare companies to design and deliver full-stack AI healthcare software. From backend systems to patient-facing apps, every layer is built with privacy, access control, and traceability in mind.

Our AI consulting services include technical reviews, data handling strategies, and compliance-focused design sessions. These are tailored for teams that want to reduce risk while keeping delivery timelines on track.

Secure hosting for healthcare AI models

Once your model is ready, we help you deploy it using HIPAA-compliant AI solutions hosted on AWS. Data in these environments is encrypted and managed under strict access policies.

We support region selection for GDPR, enforce logging at all layers, and configure alerts for suspicious activity. Our setup helps you meet both operational goals and legal requirements.

The goal is to let your models run securely while maintaining control over patient data. This is important for teams applying AI across clinical workflows or monitoring systems while staying compliant.

Conclusion

Building AI systems for healthcare is about more than accuracy and speed; it also demands privacy, security, and accountability. Whether you are working with remote patient monitoring tools, diagnostic models, or health chatbots, aligning with HIPAA and GDPR is crucial. Compliance needs to be built into every step, from infrastructure choices to how your model decisions are explained.

Compliance mistakes are easier to prevent when they’re considered from the start. That’s why planning your data flow, model behavior, and consent logic early can save months of revision later.

If you’re looking for a trusted AI software development company that understands both healthcare and regulatory needs, we can support your team across architecture, development, and deployment.

FAQs

Using such data may violate GDPR or HIPAA. It's safer to anonymize it, verify its source, or replace it with compliant datasets.

Every major update or on a regular schedule, like quarterly. This keeps your system aligned with any changes.

Yes. With the right planning and external guidance, startups can meet compliance needs effectively.

No. You still need to manage access control, consent, data use, and monitoring within your app.

Keep access logs, consent records, system documentation, and model decision traces ready for review.

Yes. You can use EHR (Electronic Health Record) add-ons, third-party APIs, or build custom modules tied to patient portals.